Welcome to the nano faux-drone zone!

[Via Tony Scott]

Former Apple designer Tuhin Kumar, who recently logged three years at Luma AI, makes a great point here:

We simply have not started rethinking interactions from the grounds up.

So many possibilities wide open when you think of human – AI in micro feedback loops vs automation alone or classic back and forth. https://t.co/iVKb02SbdU

— tuhin (@tuhin) February 18, 2026

To the extent I give Adobe gentle but unending grief about their near-total absence from the world of UI innovation, this is the kind of thing I have in mind. What if any layer in Photoshop—or any shape in Illustrator—could have realtime-rendering generative parameters attached?

Like, where are they? Don’t they want to lead? (It’s a genuine question: maybe the strategy is just to let everyone else try things, and then to finally follow along at scale.) And who knows, maybe certain folks are presently beavering away on secret awesome things. Maybe… I will continue hoping so!

Supporting my MiniMe Henry’s burgeoning interest in photography remains a great joy. Having recently captured the Super Bowl flyover with him (see previous), I prayed that Monday’s torrential downpour in LA just might give us some spectacular skies—and, what do you know, it did! Check out our gallery (selects below), featuring one seriously exuberant kid!

I’ve also been enjoying Hen’s great eye for reflections, put to good use during our recent visit to the USS Hornet:

Hey, I’ve got a fun, quick question, said with love: where the hell is Adobe in all this…?

today, we’re announcing the acquisition of @wand_app and the release of our new iPad app.

Krea iPad integrates the best of both worlds: native iOS feel with custom brushes and real-time AI.

download it now pic.twitter.com/VNCf8eB9eK

— KREA AI (@krea_ai) February 12, 2026

It’s hard to believe that when I dropped by Google in 2022, arguing vociferously that we work together to put Imagen into Photoshop, they yawned & said, “Can you show up with nine figures?”—and now they’re spending eight figures on a 60-second ad to promote the evolved version of that tech. Funny ol’ world…

Real or AI rendered? Who even knows anymore, but either way these depictions are super well done. They even got the triumphal Riley Mills stomp!

[Via Chris Davis]

MiniMe on the lens + Dad in Lightroom/Photoshop, making the dream work. 🙂

Bad To The B-ONE

Capturing yesterday’s #SuperBowl with MiniMe #B1 #F15 #F18 #F35 pic.twitter.com/FB96CmSUW3

— John Nack (@jnack) February 10, 2026

Check out our gallery for full-res shots plus a few behind-the-scenes pics. BTW: Can you tell which clouds were really there and which ones came via Photoshop’s Sky Replacement feature? If not, then the feature and I have done our jobs!

And peep this incredibly smooth camerawork that paired the flyover with the home of the brave:

Through insanely good timing, I caught Friday’s practice flyover as the jets headed up to Levi’s Stadium:

Crazy luck heading home in my neighborhood tonight!

B-1, two F-15s, two F/A-18s, and two F-35s. Practicing for the Super Bowl flyover on Sunday. pic.twitter.com/X73Lv7aXJ6

— John Nack (@jnack) February 7, 2026

Right now my MiniMe & I are getting set to head up to the Bayshore Trail with proper cameras, as we hope to catch the real event at 3:30 local time.

Meanwhile, I’ve been enjoying this deep dive video (courtesy of our Photoshop teammate Sagar Pathak, who’s gotten just insane access in past years). It features interviews with multiple pilots, producers, and more as they explain the challenges of safely putting eight cross-service aircraft into a tight formation over hundreds of thousands of people—and in front of a hundred+ million viewers. I think you’ll dig it.

A couple of weeks ago I mentioned a cool, simple UI for changing camera angles using the Qwen imaging model. Along related lines, here’s an interface for relighting images:

Qwen-Image-Edit-3D-Lighting-Control app, featuring 8× horizontal and 3× elevational positions for precise 3D multi-angle lighting control. It enables studio-level lighting with fast Qwen Image Edit inference, paired with Multi-Angle-Lighting adapter. Try it now on @huggingface. pic.twitter.com/b3UrELE6Cn

— Prithiv Sakthi (@prithivMLmods) February 4, 2026
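To get a feel for what "8× horizontal and 3× elevational" means in practice, here's a rough sketch that just enumerates the resulting grid of light positions. The even 45° spacing, the elevation labels, and the name `lighting_positions` are all my assumptions for illustration; the tweet doesn't spell out the actual angles, and this isn't the app's code.

```python
from itertools import product

# Assumption: the 8 horizontal positions are evenly spaced around the subject.
AZIMUTHS_DEG = [i * 45 for i in range(8)]

# Assumption: hypothetical labels for the 3 elevation positions.
ELEVATIONS = ["low", "level", "high"]


def lighting_positions():
    """Return every (elevation, azimuth) combination as a small dict."""
    return [
        {"azimuth_deg": azimuth, "elevation": elevation}
        for elevation, azimuth in product(ELEVATIONS, AZIMUTHS_DEG)
    ]


positions = lighting_positions()
print(len(positions))  # 24 candidate light positions
```

That's 24 discrete choices, which is few enough to present as a simple clickable widget rather than a text prompt.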

This new tool, Martini, is currently in closed beta (one can request access via the site):

Martini puts you in the director’s chair so you can make the video you see in your head… Get the exact shot you want, not whatever the model gives you. Step into virtual worlds and compose shots with camera position, lenses, and movement… No more juggling disconnected tools. Image generation, video generation, and world models—all in one place, with a built-in timeline.

I can’t wait to try stepping into the set. Beyond filmmaking, think what something like this could mean to image creation & editing…

My former colleagues Jue Wang & Chen Fang are making an impressive indie debut:

AniStudio exists because we believe animation deserves a future that’s faster, more accessible, and truly built for the AI era—not as an add-on, but from the ground up. This isn’t a finished story. It’s the first step of a new one, and we want to build it together with the people who care about animation the most.

Check it out:

Introducing https://t.co/zxqLkGyNDh: the first AI-native Animation platform.

We’re still cooking, so… Repost & comment to join the beta (FREE access).#AdobeAnimate #AniStudio pic.twitter.com/diPJV1p2CW

— AniStudio (@AniStudio_ai) February 4, 2026

Seriously, I had no idea of the depth of this plugin for Photoshop (available via perpetual or subscription licensing). It offers depth-aware lighting, face segmentation, and much more. Check out this charming 3-minute tour from my friend Renee:

This is a subtle but sneakily transformative development, potentially enabling layer-by-layer creation of editable elements:

Awesome! I’ve been asking this of Ideogram & other image creators forever.

Transparency is *huge* unlock for generative creation & editing in design tools (Photoshop, After Effects, Canva, PPT, and beyond). https://t.co/UGJQVDuet5

— John Nack (@jnack) February 2, 2026

This new tech from Meta promises to create geometry from video frames. You can try feeding it up to 16 frames via this demo site—or just check out this quick vid:

Huge drop by Meta: ActionMesh turns any video into an animated 3D mesh.

Demo available on Hugging Face pic.twitter.com/dDh144uLuP

— Victor M (@victormustar) January 30, 2026

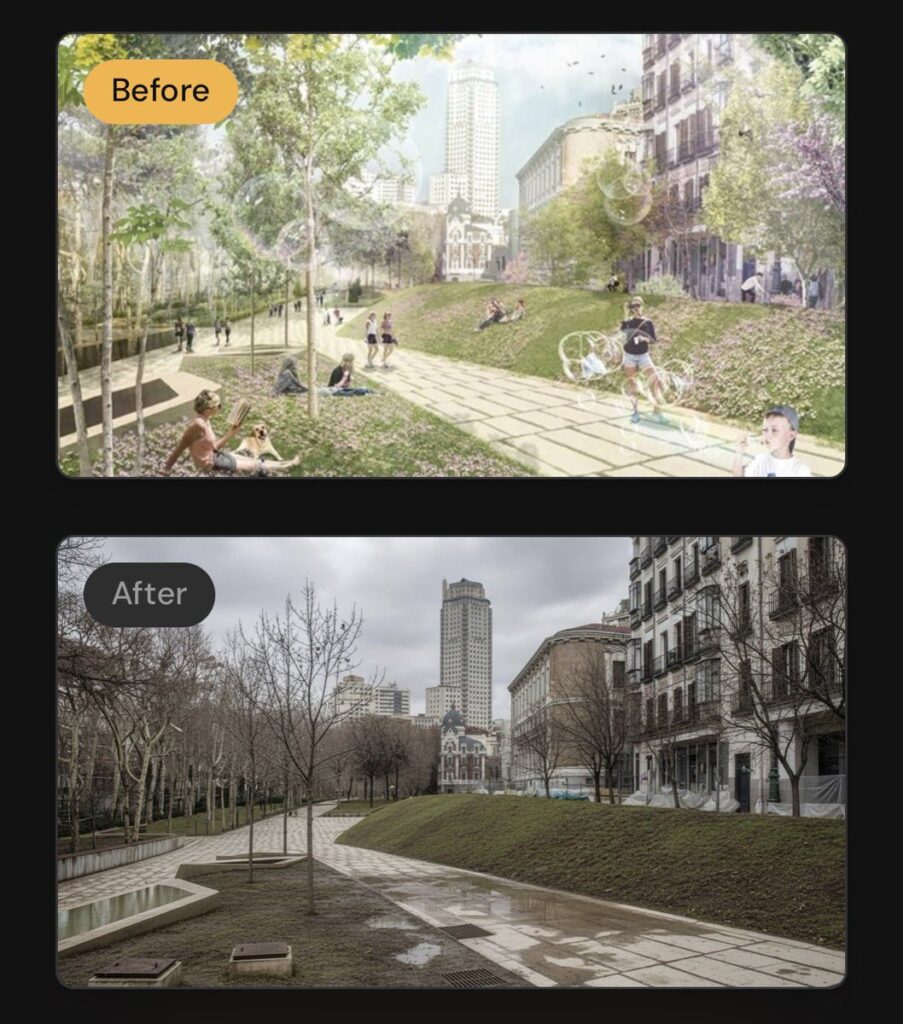

“Upload an architectural render. Get back what it’ll actually look like on a random Tuesday in November.” Try it yourself here.

As one commenter put it, “Basically a ‘what would a building look like in London most days’ simulator”—i.e. enbrittification.

I’m excited to learn more about GenLit, about which its creators say,

Given a single image and the 5D lighting signal, GenLit creates a video of a moving light source that is inside the scene. It moves around and behind scene objects, producing effects such as shading, cast shadows, specularities, and interreflections with a realism that is hard to obtain with traditional inverse rendering methods.

Video diffusion models have strong implicit representations of 3D shape, material, and lighting, but controlling them with language is cumbersome, and control is critical for artists and animators.

GenLit connects these implicit representations with a continuous 5D control… pic.twitter.com/ulo0BNOCTd

— Michael Black (@Michael_J_Black) December 15, 2025

I stumbled across some compelling teaser videos for this product, about which only a bit of info seems to be public:

A Photoshop plugin that brings truly photorealistic, prompt-free relighting into existing workflows. Instead of describing what you want in text, control lighting through visual adjustments. Change direction, intensity, and mood with precision… Modify lighting while preserving the structure and integrity of the original image. No more destructive edits or starting over.

Identity preservation—that is, exactly maintaining the shape & character of faces, products, and other objects—has been the lingering downfall of generative approaches to date, so I’m eager to take this for a spin & see how it compares to other approaches.

Check out this fun, physics-enabled prototype from Justin Ryan:

AirDraw on Apple Vision Pro is incredible when you toggle on physics.

Being able to interact with your 3D drawings makes them feel like they are actually in your room. pic.twitter.com/jFNf56f8eg

— Justin Ryan ᯅ (@justinryanio) January 27, 2026

Here’s an extended version of the demo:

The moment I switched on gravity was the moment everything changed.

Lines I had just drawn started to fall, swing, and collide like they were suddenly alive inside my room. A simple sketch became an object with weight. A doodle turned into something that could react back. It is one of those Vision Pro moments where you catch yourself smiling because it feels playful in a way you do not see coming.

Of course, Old Man Nack™ feels like being a little cautious here: Ten years ago (!) my kids were playing in Adobe’s long-deceased Project Dali…

…and five years ago Google bailed on the excellent Tilt Brush 3D painting app it acquired. ¯\_(ツ)_/¯

And yet, and yet, and yet… I Want To Believe. As I wrote back in 2015,

I always dreamed of giving Photoshop this kind of expressive painting power; hence my long & ultimately fruitless endeavor to incorporate Flash or HTML/WebGL as a layer type. Ah well. It all reminds me of this great old-ish commercial:

So, in the world of AI, and with spatial computing staying a dead parrot (just resting & pining for the fjords!), who knows what dreams may yet come?

Just yesterday I was chatting with a new friend from Punjab about having worked with a coincidentally named pair of teammates at Google—Kieran Murphy & Kiran Murthy. I love getting name-based insights into culture & history, and having met cool folks in Zimbabwe last year, this piece from 99% Invisible is 1000% up my alley.

Those crazy presumable insomniacs are back at it, sharing a preview of the realtime generative composition tools they’re currently testing:

YES! https://t.co/EOIBon8KPc pic.twitter.com/aNZtfsp2A1

— vicc (@viccpoes) January 22, 2026

This stuff of course looks amazing—but not wholly new. Krea debuted realtime generation more than two years ago, leading to cool integrations with various apps, including Photoshop:

My photoshop is more fun than yours With a bit of help from Krea ai.

It’s a crazy feeling to see brushstrokes transformed like this in realtime.. And the feeling of control is magnitudes better than with text prompts.#ai #art pic.twitter.com/Rd8zSxGfqD

— Martin Nebelong (@MartinNebelong) March 12, 2024

The interactive paradigm is brilliant, but comparatively low quality has always kept this approach from wide adoption. Compare these high-FPS renders to ChatGPT’s Studio Ghibli moment: the latter could require multiple minutes to produce a single image, but almost no one mentioned its slowness. “Fast is good, but good is better.”

I hope that Krea (and others) are quietly beavering away on a hybrid approach that combines this sort of addictive interactivity with a slower but higher-quality render (think realtime output fed into Nano Banana or similar for a final pass). I’d love to compare the results against unguided renders from the slower models. Perhaps we shall see!

Apple’s new 2D-to-3D tech looks like another great step in creating editable representations of the world that capture not just what a camera sensor saw, but what we humans would experience in real life:

Excited to release our first public AI model web app, powered by Apple’s open-source ML SHARP.

Turn a single image into a navigable 3D Gaussian Splat with depth understanding in seconds.

Try it here → https://t.co/USoFBukb30#AI #Apple #SHARP #VR #GaussianSplatting pic.twitter.com/aplWoEcesb

— Revelium™ Studio (@revelium_studio) January 9, 2026

Check out what my old teammate Luke was able to generate:

made a Mac app that turns photos into 3D scenes using Apple’s ml-sharp: https://t.co/bU8FxJ5lXk pic.twitter.com/fNwbS9gYns

— Luke Wroblewski (@LukeW) December 18, 2025

Almost exactly 19 years ago (!), I blogged about some eye-popping tech that promised interactive control over portrait lighting:

I was of course incredibly eager to get it into Photoshop—but alas, it’d take years to iron out the details. Numerous projects have reached the market (see the whole big category here I’ve devoted to them), and now with “Light Touch,” Adobe is promising even more impressive & intuitive control:

This generative AI tool lets you reshape light sources after capture — turning day to night, adding drama, or adjusting focus and emotion without reshoots. It’s like having total control over the sun and studio lights, all in post.

Check it out:

If nothing else, make sure you see the pumpkin part, which rightfully causes the audience to go nuts. 🙂

He gets it.

Honestly, Ben Affleck actually knowing AI and the landscape caught me off guard, but as a writer, makes sense.

Great takes across the board. pic.twitter.com/IcPe0n9302

— Forrest (@ForrestPKnight) January 17, 2026

I keep finding myself thinking of this short essay from Daniel Miessler:

Think very carefully about where you get help from AI.

I think of it as Job vs. Gym.

- If we’re working a manual labor job, it’s fine to have AI lift heavy things for us because the actual goal is to move the thing, not to lift it.

- This is the exact opposite of going to the gym, where the goal is to lift the weight, not to move it.

He argues for identifying gym tasks (e.g. critical thinking, problem solving), and for those, using just your brain (with minimal AI assistance, if any).

My primary metric for this is whether or not I am getting sharper at the skills that are closest to my identity.

The whole essay (2-min read) is worth checking out.

Less prompting, more direct physicality: that’s what we need to see in Photoshop & beyond.

As an example, developer apolinario writes, “I’ve built a custom camera control @gradio component for camera control LoRAs for image models Here’s a demo of @fal’s Qwen-Image-Edit-2511-Multiple-Angles-LoRA using the interactive camera component”:

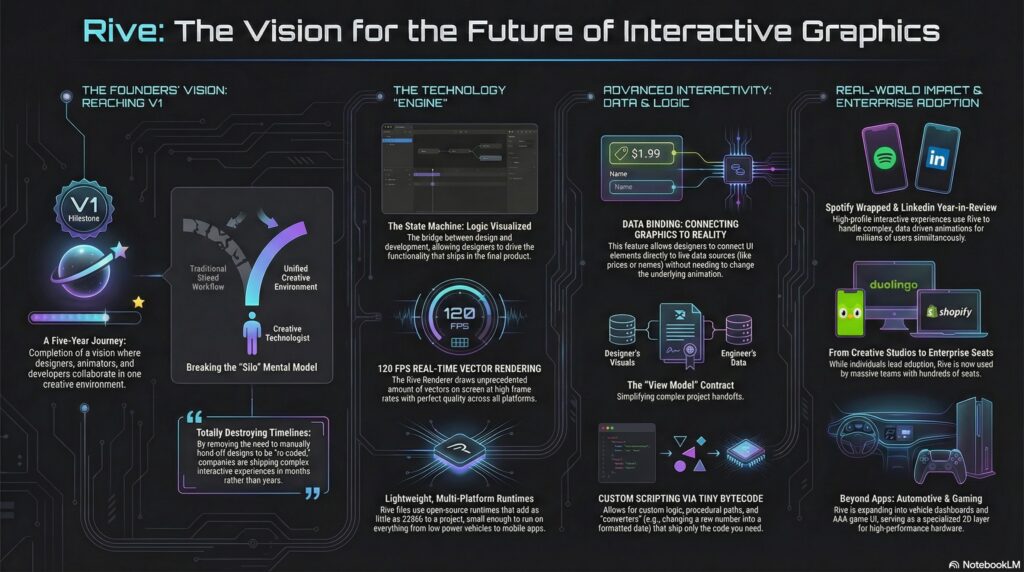

Having gotten my start in Flash 2.0 (!), and having joined Adobe in 2000 specifically to make a Flash/SVG authoring tool that didn’t make me want to walk into the ocean, I felt my cold, ancient Grinch-heart grow three sizes listening to Guido and Luigi Rosso—the brother founders behind Rive—on the School of Motion podcast:

[They] dig into what makes this platform different, where it’s headed, and why teams at Spotify, Duolingo, and LinkedIn are building entire interactive experiences with it!

Here’s a NotebookLM-made visualization of the key ideas:

Table of contents:

Reflecting on 2025: A Year of Milestones 00:24

The Challenges of a Three-Sided Marketplace 02:58

Adoption Across Designers, Developers, and Companies 04:11

The Evolution of Design and Development Collaboration 05:46

The Power of Data Binding and Scripting 07:01

Rive’s Impact on Product Teams and Large Enterprises 09:18

The Future of Interactive Experiences with Rive 12:36

Understanding Rive’s Mental Model and Scripting 24:32

Comparing Rive’s Scripting to After Effects and Flash

The Vision for Rive in Game Development 31:30

Real-Time Data Integration and Future Possibilities 40:26

Spotify Wrapped: A Showcase of Rive’s Potential 42:08

Breaking Down Complex Experiences 46:18

Creative Technologists and Their Impact 51:07

The Future of Rive: 3D and Beyond 59:30

Opportunities for Motion Designers with Rive 1:11:38

Sigh… I knew this nostalgia would come. “The way we werrrrre…” 🙂

Nano Banana & Flux are great & all, but I legit miss when DALL•E was gakked up on mescaline. pic.twitter.com/JBxPanywoF

— John Nack (@jnack) January 14, 2026

As AI continues to infuse itself more deeply into our world, I feel like I’ll often think of Paul Graham’s observation here:

Paul Graham on why you shouldn’t write with AI:

“In preindustrial times most people’s jobs made them strong. Now if you want to be strong, you work out. So there are still strong people, but only those who choose to be. It will be the same with writing. There will… pic.twitter.com/RWGZeJetUp

— Kieran Drew (@ItsKieranDrew) December 25, 2025

I initially mistook this tech for text->layers, but it’s actually image->layers. Having said that, if it works well, it might be functionally similar to direct layer output. I need to take it for a spin!

We’re finally getting layers in AI images.

The new Qwen Image Layered LoRA allows you to decompose any image into layers – which means you can move, resize, or replace an object / background.

This is Photoshop-grade editing, offered as an open source model pic.twitter.com/AIsD9GAtIw

— Justine Moore (@venturetwins) December 29, 2025

“It’s not that you’re not good enough, it’s just that we can make you better.”

So sang Tears for Fears, and the line came to mind as the recently announced PhotaLabs promised to show “your reality, but made more magical.” That is, they create the shots you just missed, or wish you’d taken:

Honestly, my first reaction was “ick.” I know that human memory is famously untrustworthy, and photos can manipulate it—not even through editing, but just through selective capture & curation. Even so, this kind of retroactive capture seems potentially deranging. Here’s the date you wish you’d gone on; here’s the college experience you wish you’d had.

I’m reminded of the Nathaniel Hawthorne quote featured on the Sopranos:

No man for any considerable period can wear one face to himself, and another to the multitude, without finally getting bewildered as to which may be the true.

Like, at what point did you take these awkward sibling portraits…?

We all need an awkward ’90s holiday photoshoot with our siblings.

If you missed the boat (like I did), you’re in luck – I wrote some prompts you can use with Nano Banana Pro

Upload a photo of each person and then use the following:

“An awkward vintage 1990s studio… pic.twitter.com/LDbl2aQFPp

— Justine Moore (@venturetwins) December 24, 2025

And, hey, darn if I can resist the devil’s candy: I wasn’t able to capture a shot of my sons together with their dates, so off I went to a combo of Gemini & Ideogram. I honestly kinda love the results, and so down the cognitive rabbit hole I slide… ¯\_(ツ)_/¯

Of course, depending on how far all this goes, the following tweet might prove to be prophetic:

Modern day horror story where you look though the photo albums of you as a kid and realize all the pictures have this symbol in the corner pic.twitter.com/dHnUrUJs0r

— gabe (@allgarbled) December 21, 2025

Hey gang—thanks for being part of a wild 2025, and here’s to a creative year ahead. Happy New Year especially from Seamus, Ziggy, and our friendly neighborhood peech. 🙂

My new love language is making unsought Happy New Year images of friends’ dogs. (HT to @NanoBanana, @ChatGPTapp, and @bfl_ml Flux.)

Happy New Year, everyone! pic.twitter.com/nF2TfE4bQN

— John Nack (@jnack) December 31, 2025

Sorry-not-sorry to be a bit provocative, but seriously, to highlight one of one million examples:

Testing fence removal on my son’s photo using @NanoBanana, @ChatGPTapp, and @bfl_ml.

They’re all impressive, but Nano tried to put jet engines on this prop plane, so I’m giving this round to ChatGPT. pic.twitter.com/DOvZQLT5H5

— John Nack (@jnack) December 23, 2025

And in a slightly more demanding case:

For Christmas my wife requested a portrait of our coked-up puppy—so say hello to my little friend: pic.twitter.com/uyFfc7ZDzU

— John Nack (@jnack) December 25, 2025

For the latter, I used Photoshop to remove a couple of artifacts from the initial Scarface-to-puppy Nano Banana generation, and to resize the image to fit onto a canvas—but geez, there’s almost no world where I’d now think to start in PS, as I would’ve for the last three decades.

Back in 2002, just after Photoshop godfather Mark Hamburg left the project in order to start what became Lightroom, he talked about how listening too closely to existing customers could backfire: they’ll always give you an endless list of nerdy feature requests, but in addressing those, you’ll get sucked up the complexity curve & end up focusing on increasingly niche value.

Meanwhile disruptive competitors will simply discard “must-have” features (in the case of Lightroom, layers), as those had often proved to be irreducibly complex. iOS did this to macOS not by making the file system easier to navigate, but by simply omitting normal file system access—and only later grudgingly allowing some of it.

Steve Jobs famously talked about personal computers vs. mobile devices in terms of cars vs. trucks:

Obviously Photoshop (and by analogy PowerPoint & Excel & other “indispensable” apps) will stick around for those who genuinely need it—but generative apps will do to Photoshop what (per Hamburg) Photoshop did to the Quantel Paintbox, i.e. shove it up into the tip of the complexity/usage pyramid.

Adobe will continue to gamely resist this by trying to make PS easier to use, which is fine (except of course where clumsy new affordances get in pros’ way, necessitating a whole new “quiet mode” just to STFU!). And—more excitingly to guys like me—they’ll keep incorporating genuinely transformative new AI tech, from image transformation to interactive lighting control & more.

Still, everyone sees what’s unfolding, and “You cannot stop it, you can only hope to contain it.” Where we’re going, we won’t need roads.

…you pass the time screwing around doing competitive AI model testing featuring the building’s baffling architecture…

Round 2 pic.twitter.com/zSGHVL6aPL

— John Nack (@jnack) December 20, 2025

…and sketchy chow:

More in-hospital @NanoBanana vs. ChatGPT testing:

“Please create a funny infographic showing a cutaway diagram for the world’s most dangerous hospital cuisine: chicken pot pie. It should show an illustration of me (attached) gazing in fear…” pic.twitter.com/txnuamvGVq

— John Nack (@jnack) December 20, 2025

Great to see that he has a good sense of humor about it. 🙂

@bbcradio1 james cameron on being haunted by ryan gosling’s snl papyrus sketch #avatar #jamescameron #ryangosling ♬ original sound – BBC Radio 1

And just for old times’ sake, ’cause it’s hard to keep a good font down; even harder with a bad one!

This seems like the kind of specific, repeatable workflow that’ll scale & create a lot of real-world value (for home owners, contractors, decorators, paint companies, and more). In this thread Justine Moore talks about how to do it (before, y’know, someone utterly streamlines it ~3 min from now!):

I figured out the workflow for the viral AI renovation videos

You start with an image of an abandoned room, and prompt an image model to renovate step-by-step.

Then use a video model for transitions between each frame.

Or…just use the @heyglif agent! How to + prompt https://t.co/ic4grWEysk pic.twitter.com/kSyZmd9v82

— Justine Moore (@venturetwins) December 16, 2025
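The shape of that workflow is easy to sketch: one still per renovation step, then one video transition between each consecutive pair of stills. The snippet below only plans the jobs; it doesn't call any image or video model, and the function name, step text, and frame labels are all hypothetical stand-ins of mine.

```python
def plan_renovation_video(steps):
    """Plan the stills and transitions for a step-by-step renovation video.

    Returns (frames, transitions): frames holds one label per still,
    starting with the untouched room; transitions pairs each still
    with the one that follows it.
    """
    frames = ["original room"] + [f"after: {step}" for step in steps]
    # Each transition clip bridges two consecutive stills.
    transitions = list(zip(frames, frames[1:]))
    return frames, transitions


frames, transitions = plan_renovation_video(
    ["clear debris", "repaint walls", "add furniture"]
)
print(len(frames))       # 4 stills
print(len(transitions))  # 3 transition clips
```

Each still would come from prompting an image model with the previous still plus the next step, and each pair would go to a video model, per the thread.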

Well, after years and years of trying to make it happen, Google has now shipped the ability to upload a selfie & see yourself in a variety of outfits. You can try it here.

U.S. shoppers, say goodbye to bad dressing room lighting. You can now use Nano Banana (our Gemini 2.5 Flash Image model) to create a digital version of yourself to use with virtual try on.

Simply upload a selfie at https://t.co/OeY1NiEMDZ and select your usual clothing size to… pic.twitter.com/Am0GiQSNg8

— Google (@Google) December 12, 2025

At least in my initial tests, results were kinda weird & off-putting:

I mean, obviously this ancient banger (courtesy of Bryan O’Neil Hughes, c.2003) is the only correct rendering! 🙂

That’s it. That’s the post. Happy Monday. 🙂

As I’m fond of noting, the only thing more incredible than witchcraft like this is just how little notice people now take of it. ¯\_(ツ)_/¯ But Imma keep noticing!

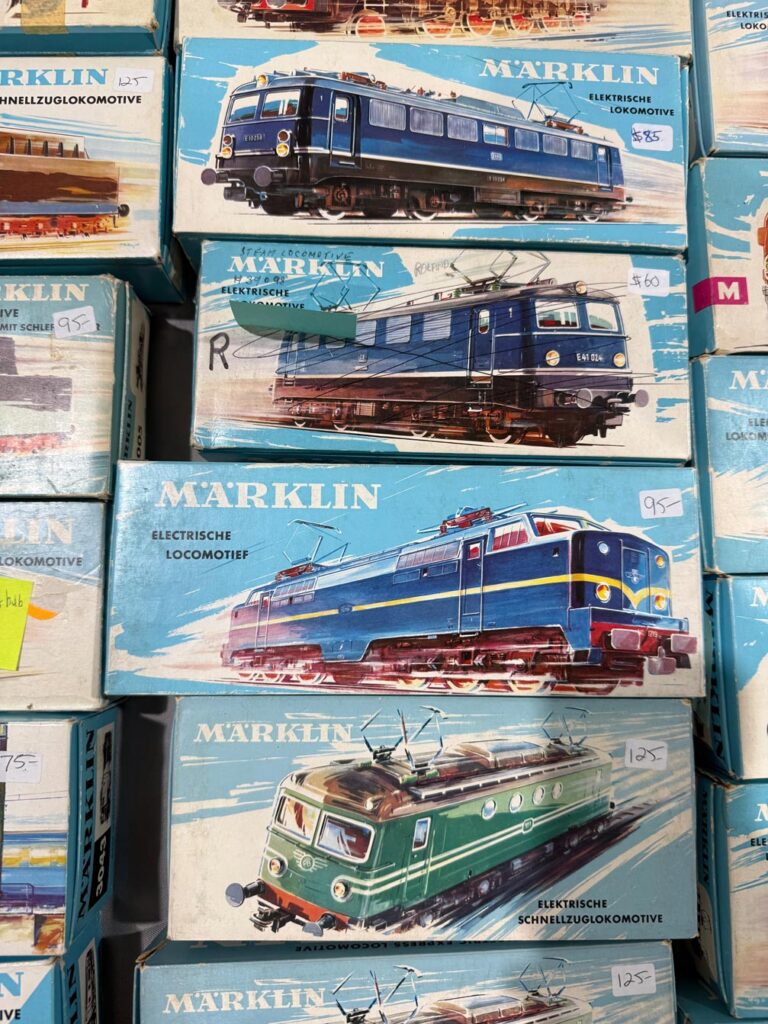

Two years ago (i.e. an AI eternity, obvs), I was duly impressed when, walking around a model train show with my son, DALL•E was able to create art kinda-sorta in the style of vintage boxes we beheld:

Seeing a vintage model train display, I asked it to create a logo on that style. It started poorly, then got good. pic.twitter.com/v7qL8Xnqpp

— John Nack (@jnack) November 12, 2023

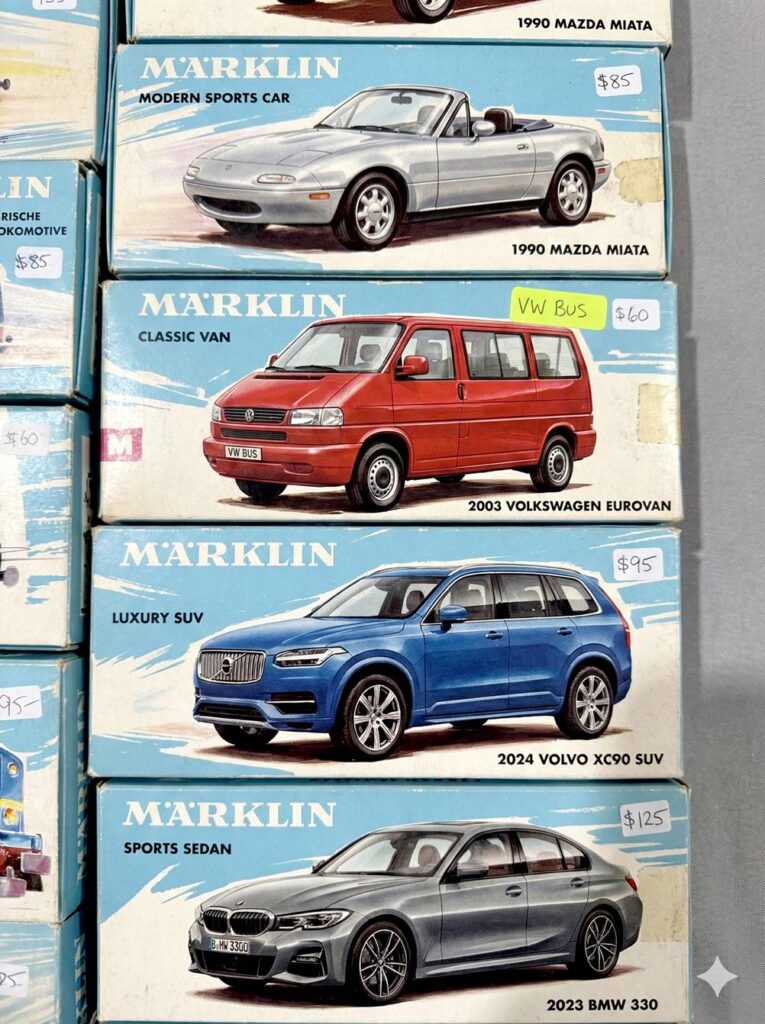

I still think that’s amazing—and it is!—but check out how far we’ve come. At a similar gathering yesterday, I took the photo below…

…and then uploaded it to Gemini with the following prompt: “Please create a stack of vintage toy car boxes using the style shown in the attached picture. The cars should be a silver 1990 Mazda Miata, a red 2003 Volkswagen Eurovan, a blue 2024 Volvo XC90, and a gray 2023 BMW 330.” And boom, head shot, here’s what it made:

I find all this just preposterously wonderful, and I hope I always do.

As Einstein is said to have remarked, “There are only two ways to live your life: one is as though nothing is a miracle, the other is as though everything is.”

Jesús Ramirez has forgotten, as the saying goes, more about Photoshop than most people will ever know. So, encountering some hilarious & annoying Remove Tool fails…

.@Photoshop AI fail: trying to remove my sons heads (to enable further compositing), I get back… whatever the F these are. pic.twitter.com/U8WtoUh2qK

— John Nack (@jnack) December 8, 2025

…reminded me that I should check out his short overview on “How To Remove Anything From Photoshop.”

This season my alma mater has been rolling out sport-specific versions of the classic leprechaun logo, and when the new basketball version dropped today, I decided to have a little fun seeing how well Nano Banana could riff on the theme.

My quick take: It’s pretty great, though applying sequential turns may cause the style to drift farther from the original (more testing needed).

I dig it. Just for fun, I asked Google’s @NanoBanana to create more variations for other sports: pic.twitter.com/i3CBTr8bpp

— John Nack (@jnack) December 9, 2025

I generally love shallow depth of field & creamy bokeh, but this short overview makes a compelling case for why Spielberg has almost always gone in the opposite direction:

Interesting—if not wholly unexpected—finding: People dig what generative systems create, but only if they don’t know how the pixel-sausage was made. ¯\_(ツ)_/¯

AI created visual ads got 20% more clicks than ads created by human experts as part of their jobs… unless people knew the ads are AI-created, which lowers click-throughs to 31% less than human-made ads

Importantly, the AI ads were selected by human experts from many AI options pic.twitter.com/EJkZ1z05FO

— Ethan Mollick (@emollick) December 6, 2025

…you give back flying lighthouses (duh!).

(made with @grok) pic.twitter.com/nGWMLK170Z

— John Nack (@jnack) December 1, 2025

Seriously, its mind (?) is gonna be blown. 🙂

“No one on X is calling anything ‘Nano Banana’ or ‘Gemini 2.5 Flash Image’ in any consistent or meaningful way.”

I’m not so sure I agree 100% with your policework there, @Grok… pic.twitter.com/P5kGOfg5Ln

— John Nack (@jnack) December 4, 2025

Here’s some actual data for relative interest in Nano Banana, Flux, Midjourney, and Ideogram:

That’s my core takeaway from this great conversation, which will give you hope.

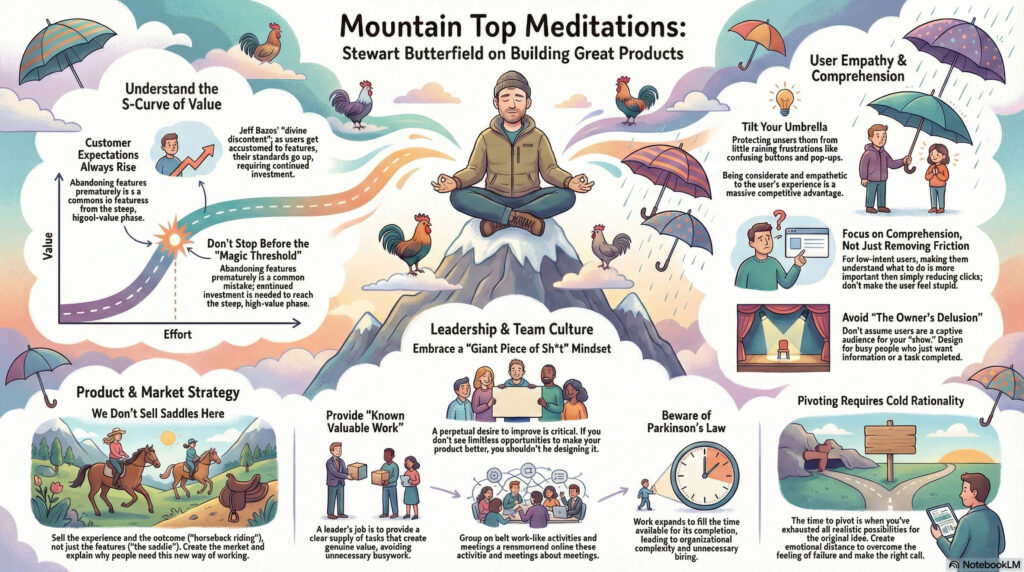

Slack & Flickr founder Stewart Butterfield, whose We Don’t Sell Saddles Here memo I’ve referenced countless times, sat down for a colorful & wide-ranging talk with Lenny Rachitsky. For the key points, check out this summary, or dive right into the whole chat. You won’t regret it.

Visual summary courtesy of NotebookLM:

(00:00) Introduction to Stewart Butterfield

(04:58) Stewart’s current life and reflections

(06:44) Understanding utility curves

(10:13) The concept of divine discontent

(15:11) The importance of taste in product design

(19:03) Tilting your umbrella

(28:32) Balancing friction and comprehension

(45:07) The value of constant dissatisfaction

(47:06) Embracing continuous improvement

(50:03) The complexity of making things work

(54:27) Parkinson’s law and organizational growth

(01:03:17) Hyper-realistic work-like activities

(01:13:23) Advice on when to pivot

(01:18:36) The importance of generosity in leadership

(01:26:34) The owner’s delusion

Being crazy-superstitious when it comes to college football, I must always repay Notre Dame for every score by doing a number of push-ups equivalent to the current point total.

In a normal game, determining the cumulative number of reps is pretty easy (e.g. 7 + 14 + 21), but when the team is able to pour it on, the math—and the burn—get challenging. So, I used Gemini the other day to whip up this little counter app, which it did in one shot! Days of Miracles & Wonder, Vol. ∞.
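For the curious, the underlying math is just a running total of running totals. Here's a minimal sketch of it; this is my own illustration and not the app Gemini produced, and the function name `pushups_owed` and the sample scores are made up.

```python
from itertools import accumulate


def pushups_owed(scores):
    """Given the points from each score, in order, return
    (reps owed after each score, total reps for the game).

    After every score, the reps owed equal the team's point total so far.
    """
    reps_per_score = list(accumulate(scores))
    return reps_per_score, sum(reps_per_score)


# Three touchdowns with extra points: 7 + 14 + 21
reps, total = pushups_owed([7, 7, 7])
print(reps)   # [7, 14, 21]
print(total)  # 42
```

The total grows roughly with the square of the number of scores, which is why a blowout hurts so much more than a close game.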

Introduced my son to vibe coding with @GeminiApp by whipping up a push-up counter for @NDFootball. (RIP my pecs!) #GoIrish

Try it here: https://t.co/fjEnLvTRFK pic.twitter.com/B1YhiNmWSk

— John Nack (@jnack) November 22, 2025

There’s almost no limit to my insane love of practical animal puppetry (usually the sillier, the better—e.g. Triumph, The Falconer), so I naturally loved this peek behind the scenes of Apple’s new spot:

Puppeteers dressed like blueberries. Individually placed whiskers. An entire forest built 3 feet off the ground. And so much more.

Bonus: Check out this look into the making of a similarly great Portland tourism commercial:

I can’t think of a more burn-worthy app than Concur (whose “value prop” to enterprises, I swear, includes the amount they’ll save when employees give up rather than actually get reimbursed).

That’s awesome!

Given my inability to get even a single expense reimbursed at Microsoft, plus similar struggles at Adobe, I hope you won’t mind if I get a little Daenerys-style catharsis on Concur (via @GeminiApp, natch). pic.twitter.com/128VExTDoS

— John Nack (@jnack) November 22, 2025

How well can Gemini make visual sense of various famous plots? Well… kind of well? 🙂 You be the judge.

“The Dude Conceives” — Testing @GeminiApp + @NanoBanana to visually explain The Big Lebowski, Die Hard, Citizen Kane, and The Godfather.

I find the glitches weirdly charming (e.g. Bunny Lebowski as actual bunny!). pic.twitter.com/dT3X3423Ee

— John Nack (@jnack) November 24, 2025